Amazon Web Services(AWS)

Monday, December 23, 2019

AWS Simple Storage Service (S3)

Before we dive into AWS S3 (Simple Storage Service), it’s important to understand the basics of storage types: Block Storage, File Storage, and Object Storage. Having a clear understanding of these will help you appreciate how AWS S3 works and why it’s a popular choice for cloud storage.

A. Storage Types

Storage is typically divided into three major types.

A.1 Block Storage

Block storage splits data into fixed-size blocks. When you update data, only the affected block is modified, making it fast and efficient. It offers the lowest latency and highest performance of the three storage types and is highly redundant, making it ideal for transactional databases, structured databases, and workloads with frequent random read/write operations.

Best Use Cases: Transaction databases, structured data storage, and scenarios requiring high performance and low latency.

A.2 File Storage

File storage organizes data as individual files in a hierarchical folder system. Each file has its own metadata (e.g., name, creation date, modified date). While it works well with a limited number of files, performance can drop significantly as the volume of files grows. Searching, updating, and managing files becomes cumbersome with large-scale data due to the nested directory structure.

Best Use Cases: Shared file systems, document management, and scenarios where hierarchical organization of files is preferred.

A.3 Object Storage

Object storage was created to address the scalability limitations of file storage. Instead of a hierarchical structure, object storage uses a flat address space, where each file is stored as a separate object, along with its metadata and a unique identifier. This design allows it to scale efficiently and is a perfect fit for distributed storage systems. Object storage is highly scalable, cost-effective, and typically accessed via APIs or command line, as it cannot be mounted directly as a drive on a virtual server.

Best Use Cases: Storing unstructured data (e.g., media files, backups), scalable storage solutions, and cloud-native applications.

B. Distributed Architecture

In a distributed architecture, data processing and storage are not confined to a single machine. Instead, the workload is spread across multiple independent machines that work together, often located in different geographic locations and connected via a network. This setup brings several key advantages: it enhances system scalability, improves fault tolerance, and ensures high availability. If one machine fails, others can take over, minimizing downtime and data loss. This distributed approach is a fundamental aspect of modern cloud services like AWS S3, allowing them to handle massive amounts of data reliably and efficiently, regardless of location.

C. Simple Storage Service (S3)

AWS S3 (Simple Storage Service) is Amazon's cloud-based storage solution, designed to handle massive amounts of data using an object-based storage system. S3 is built on a distributed architecture, meaning your data isn’t stored in one single place but is spread across multiple AWS locations for better reliability and accessibility. This setup ensures that your files are safe and can be accessed quickly, even if there’s a hardware failure in one location.

You can easily store or retrieve any amount of data with S3 using various methods like the AWS Console, API, or SDKs. One of the biggest advantages of S3 is its scalability: there’s no limit to the number of objects you can store, and each object can range in size from as little as 0 bytes to a massive 5 terabytes.

C.1 Bucket

In AWS S3, a Bucket is essentially a flat container where all your files (or objects) are stored. Think of it as a big folder for your data, but without any hierarchical structure. To start using S3, the first step is creating a Bucket, and it's important to note that Buckets are region-specific, meaning they are created in a specific AWS region.

Here are some key facts about S3 Buckets:

Flat Structure with Logical Folders: While Buckets don’t have a traditional folder structure, you can create logical "folders" inside them for better organization.

Unlimited Storage: You can store an unlimited number of objects in a Bucket, so you don't need to worry about running out of space.

No Nested Buckets: Buckets cannot contain other Buckets; they can only hold objects (files).

Bucket Limit: By default, you can create up to 100 Buckets per AWS account. However, this limit is flexible and can be increased if needed.

Ownership: Once you create a Bucket, you own it, and the ownership cannot be transferred to another account.

Unique Names Globally: Every Bucket name must be globally unique across all AWS accounts and regions. If someone else has already used a name, you’ll need to choose a different one. However, once a Bucket is deleted, the name becomes available for reuse.

No Renaming: You cannot rename a Bucket once it’s created, so choose your name wisely.

Naming Conventions: Bucket names must follow specific rules. They can contain only lowercase letters, numbers, hyphens, and periods, must begin and end with a letter or number, and must be between 3 and 63 characters long.
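The naming rules above can be checked before you ever call AWS. The following is a minimal sketch, assuming only the basic rules (length, allowed characters, start and end characters, no adjacent periods, no IP-address-like names); the function name `is_valid_bucket_name` is hypothetical, and AWS applies a few additional restrictions (such as reserved prefixes) that are not covered here.

```python
import re

# Basic shape: 3-63 characters, lowercase letters, digits, hyphens, periods,
# beginning and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")
# Names formatted like an IPv4 address (e.g. 192.168.1.1) are not allowed.
_IP_LIKE = re.compile(r"^\d{1,3}(\.\d{1,3}){3}$")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the simplified naming rules sketched here."""
    if not _BUCKET_NAME.match(name):
        return False
    if ".." in name:  # two adjacent periods are rejected
        return False
    if _IP_LIKE.match(name):
        return False
    return True
```

A check like this only catches malformed names early; whether a name is actually available still depends on global uniqueness, which only S3 itself can confirm.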

C.2 Object

AWS S3 objects are the core units of data storage within S3 Buckets, each with unique characteristics and identifiers.

Object Size: Each object in S3 can range from as small as 0 bytes up to a massive 5 terabytes, making S3 flexible for storing anything from tiny data snippets to large files.

Unique Access ID (Key): Every object in S3 is assigned a unique identifier known as an object key or name, which is how you access and manage specific files within a Bucket.

Unique Identification Properties: To access an object in S3, you can use a combination of properties that ensures each object is uniquely identifiable:

- Service Endpoint: The URL that directs you to the S3 service.
- Bucket Name: The specific Bucket where the object is stored.
- Object Key (Name): The unique name you assigned to the object within the Bucket.
- Object Version (Optional): If versioning is enabled, this identifies specific versions of the object.

Region-Specific Storage: Since each Bucket is region-specific, the objects within it are also stored in that specific AWS region. Unless you deliberately transfer them or enable cross-region replication, objects stay within their initial region. Cross-region replication, which allows data to be automatically copied to another region, will be covered in more detail later in this blog.

High Data Durability: AWS S3 is designed with high durability in mind. Each object stored in S3 is redundantly stored across multiple facilities within the same region, offering reliable, long-term storage of data with minimal risk of loss.

C.3 Storage Classes

Now that you know what Buckets and objects are, let's talk about what happens when you upload your files (objects) to an S3 Bucket. AWS gives you the option to choose a Storage Class for each object. But why does this matter? In any data environment, different types of data serve different purposes. Some data needs to be accessed frequently, while other data might be archived for long-term storage. To meet these varied needs, AWS S3 offers multiple storage classes, each with its own balance of durability, availability, and cost.

The basic idea is simple: if you need higher durability and availability, you’ll pay a higher cost. However, if you’re willing to compromise on how often or quickly you access the data, you can save money by choosing a less expensive storage class. Below, we’ll go over the different storage classes that AWS S3 offers and what makes each one unique.

C.3.1 S3 Standard

C.3.2 S3 Standard-Infrequent Access

C.3.3 S3 One Zone-Infrequent Access

C.3.4 S3 Reduced Redundancy Storage

C.3.5 S3 Intelligent-Tiering

C.3.6 S3 Glacier

C.3.7 S3 Glacier Deep Archive

C.3.1 S3 Standard

- Ideal for: Frequently accessed data with high durability and availability requirements.
- Durability: 99.999999999% (11 nines).
- Availability: 99.99% per year.
- Redundancy: Objects are stored redundantly across at least three Availability Zones.
- Use Cases: Websites, mobile apps, and data analytics where quick access is crucial.
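To get a feel for what these percentages mean, the arithmetic can be sketched as below. This is only an illustration of the design targets quoted above, not a guarantee or an official formula; the function names are hypothetical.

```python
def expected_annual_loss(num_objects: int, durability: float) -> float:
    """Average number of objects expected to be lost per year at a given durability."""
    return num_objects * (1.0 - durability)


def max_downtime_minutes_per_year(availability: float) -> float:
    """Minutes per year a service may be unavailable at a given availability."""
    minutes_per_year = 365 * 24 * 60  # 525,600 minutes in a non-leap year
    return minutes_per_year * (1.0 - availability)


# With 11 nines of durability and 10 million objects, the expected loss is about
# 0.0001 objects per year, i.e. roughly one object every 10,000 years.
loss = expected_annual_loss(10_000_000, 0.99999999999)

# 99.99% availability corresponds to roughly 52.56 minutes of downtime per year.
downtime = max_downtime_minutes_per_year(0.9999)
```

The same two functions can be reused for the other storage classes by plugging in their durability and availability figures.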

C.3.2 S3 Standard-Infrequent Access (S3 Standard-IA)

- Ideal for: Data that is accessed infrequently but needs to be quickly available when required.
- Durability: 99.999999999% (11 nines).
- Availability: 99.9% per year.
- Minimum Storage Duration: 30 days (you’ll be charged for at least 30 days even if the file is deleted sooner).
- Minimum Billable Object Size: 128 KB (files smaller than this are billed as 128 KB).
- Retrieval Costs: Per GB retrieval charges apply.
- Redundancy: Objects are stored redundantly across at least three Availability Zones.
- Use Cases: Backup and disaster recovery, less frequently accessed data.
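The minimum storage duration and minimum billable object size interact in a way that is easy to miss for small, short-lived files. The sketch below models only those two rules from the list above; it ignores actual prices, request fees, and retrieval charges, and the name `billable_gb_days` is hypothetical.

```python
MIN_BILLABLE_BYTES = 128 * 1024  # objects smaller than 128 KB are billed as 128 KB
MIN_BILLABLE_DAYS = 30           # objects deleted earlier are billed for 30 days
GIB = 1024 ** 3


def billable_gb_days(size_bytes: int, days_stored: int) -> float:
    """Storage usage (in GB-days) that would be billed under the two minimums."""
    billable_bytes = max(size_bytes, MIN_BILLABLE_BYTES)
    billable_days = max(days_stored, MIN_BILLABLE_DAYS)
    return billable_bytes / GIB * billable_days


# A 10 KB file deleted after 5 days is billed like a 128 KB file kept for 30 days.
small_short_lived = billable_gb_days(10 * 1024, 5)
at_the_minimums = billable_gb_days(128 * 1024, 30)
```

This is why infrequent-access classes tend to suit larger objects that live for at least a month, while many tiny or short-lived objects may be cheaper in S3 Standard.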

C.3.3 S3 One Zone-Infrequent Access (S3 One Zone-IA)

- Ideal for: Non-critical, long-term data that can be easily reproduced if lost.
- Durability: 99.999999999% (11 nines).
- Availability: 99.5% per year.
- Minimum Storage Duration: 30 days.
- Minimum Billable Object Size: 128 KB.
- Redundancy: Data is stored in a single Availability Zone (not multiple), so there’s no protection against zone failures.
- Use Cases: Easily replaceable backups, non-critical data that can be recreated.

C.3.4 S3 Reduced Redundancy Storage (RRS)

- Status: Not recommended by AWS anymore due to cost inefficiency.
- Durability: 99.99% (4 nines).
- Availability: 99.99% per year.
- Redundancy: Three copies across different Availability Zones.
- Use Cases: Previously used for non-critical, frequently accessed data, but now largely replaced by S3 Standard and One Zone-IA.

C.3.5 S3 Intelligent-Tiering

- Ideal for: Data with unpredictable access patterns; long-term storage with automatic cost optimization.
- Durability: 99.999999999% (11 nines).
- Availability: 99.9% per year.
- Minimum Storage Duration: 30 days.
- Monitoring Fees: Additional charges for monitoring and automatic tier changes.
- Redundancy: Objects are stored redundantly across at least three Availability Zones.
- Use Cases: Data with changing access patterns, where AWS automatically moves objects between a frequent-access tier (priced like S3 Standard) and an infrequent-access tier (priced like S3 Standard-IA) to optimize costs.

C.3.6 S3 Glacier

- Ideal for: Long-term backups and archival storage, with occasional access.
- Durability: 99.999999999% (11 nines).
- Availability: 99.99% per year.
- Minimum Storage Duration: 90 days.
- Retrieval Time: Ranges from minutes to hours, depending on the retrieval option selected.
- Free Retrieval: Up to 10 GB per month is free.
- Redundancy: Objects are stored redundantly across at least three Availability Zones.
- Use Cases: Compliance archives, financial data, and long-term backups.

C.3.7 S3 Glacier Deep Archive

- Ideal for: Rarely accessed data that must be preserved for long periods.
- Durability: 99.999999999% (11 nines).
- Availability: 99.99% per year.
- Minimum Storage Duration: 180 days.
- Retrieval Time: 12 to 48 hours.
- Cost Efficiency: Up to 75% cheaper than S3 Glacier.
- Redundancy: Objects are stored redundantly across at least three Availability Zones.

- Use Cases: Data retention for compliance, long-term archives, and infrequently accessed records.

Choosing the right AWS S3 storage class depends on your data access needs, durability requirements, and budget. For frequently accessed data, go with S3 Standard. If access is infrequent, S3 Standard-IA is a better choice. For non-critical, replaceable data, S3 One Zone-IA offers a cost-effective solution. When you need to store archival data, S3 Glacier or S3 Glacier Deep Archive are ideal options. Finally, if your access patterns are unpredictable, S3 Intelligent-Tiering automatically manages data to help you save costs.

C.4 S3 Bucket Versioning

AWS S3 Bucket Versioning is a powerful feature that allows you to keep multiple versions of the same object in a Bucket. It acts as a safety net against accidental deletions or overwrites, and is useful for data retention and archiving.

Key Features of S3 Bucket Versioning:

1. Automatic Versioning: When versioning is enabled, any time you overwrite an existing object, S3 automatically creates a new version instead of replacing the original. This way, you can easily access older versions whenever needed.

2. Delete Markers: If you delete an object in a versioned Bucket, S3 doesn’t permanently delete it. Instead, it places a delete marker on the object. This hides the object from standard listing but allows you to restore it by simply removing the delete marker.

3. Applies to All Objects: Once enabled, versioning applies to all objects in the Bucket, including both existing and newly uploaded files.

4. Retroactive Protection: If you enable versioning on an existing Bucket, it immediately starts protecting both old and new objects by maintaining their versions as they are updated.

5. Permanent Deletion: Only the Bucket owner (or a user the owner has explicitly authorized) can permanently delete an object version, even when versioning is enabled. This adds an extra layer of security against unintended data loss.

6. Versioning States:

- Unversioned: Default state when versioning is not enabled.
- Enabled: Actively maintains versions of objects.
- Suspended: Stops creating new versions, but existing object versions remain intact.

7. Suspending Versioning: If you suspend versioning, all existing versions are retained, but new updates to objects won’t create additional versions.

8. Default Version Retrieval: When you retrieve an object without specifying a version ID, S3 automatically returns the most recent version by default.
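The behaviour described in this list (new versions on overwrite, delete markers, and restoring by removing a marker) can be illustrated with a small in-memory model. This is a toy sketch for understanding only; the class `VersionedBucket` and its methods are hypothetical and are not the S3 API.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Version:
    version_id: str
    body: Optional[bytes]  # None means this entry is a delete marker


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (not the S3 API)."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[Version]] = {}
        self._ids = itertools.count(1)

    def _new_id(self) -> str:
        return f"v{next(self._ids)}"

    def put(self, key: str, body: bytes) -> str:
        """Store a new version; older versions are kept, not replaced."""
        version = Version(self._new_id(), body)
        self._versions.setdefault(key, []).append(version)
        return version.version_id

    def delete(self, key: str) -> str:
        """A plain delete adds a delete marker instead of removing data."""
        marker = Version(self._new_id(), None)
        self._versions.setdefault(key, []).append(marker)
        return marker.version_id

    def delete_version(self, key: str, version_id: str) -> None:
        """Permanently remove one specific version or delete marker."""
        self._versions[key] = [
            v for v in self._versions.get(key, []) if v.version_id != version_id
        ]

    def get(self, key: str, version_id: Optional[str] = None) -> Optional[bytes]:
        """Return the latest version, or a specific one if version_id is given."""
        versions = self._versions.get(key, [])
        if version_id is not None:
            for v in versions:
                if v.version_id == version_id:
                    return v.body
            return None
        # If the newest entry is a delete marker, the object appears deleted.
        return versions[-1].body if versions else None
```

In this model, calling `put` twice on the same key keeps both versions, `delete` hides the object by appending a marker, and `delete_version` on that marker makes the previous version current again.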

Why Use Bucket Versioning?

Enabling versioning is especially beneficial for:

- Data Protection: Prevents accidental overwrites or deletions.
- Compliance and Archiving: Retains historical versions of data for regulatory or audit purposes.
- Disaster Recovery: Offers a straightforward way to restore previous versions in case of unexpected changes.

In short, S3 Bucket Versioning is an essential feature for robust data management and helps ensure that your important files are safeguarded, even in the face of human error or system failures.

C.5 S3 Bucket MFA Delete

AWS S3 offers MFA Delete, an extra layer of security designed to protect your data against unauthorized deletions. When MFA Delete is enabled, deleting any version of an object requires not only the standard permissions but also a Multi-Factor Authentication (MFA) code. This feature provides an added safeguard, ensuring that even if someone gains access to your AWS account, they cannot delete your data without also having access to your MFA device.

Key Points About MFA Delete:

- Advanced Protection: Prevents accidental or unauthorized deletion of critical data by requiring an MFA code in addition to normal access credentials.
- Command Line or API Only: MFA Delete cannot be enabled through the AWS Console; it must be configured via the AWS CLI or API.
- Works with Versioning: MFA Delete is only available if versioning is enabled on the Bucket, adding an extra level of control for managing object deletions.

C.6 AWS S3 Consistency Levels: Understanding Data Consistency

Before diving into S3’s consistency model, let’s first clarify what data consistency means. In a distributed storage system like AWS S3, data consistency refers to how quickly changes to data (such as updates, deletions, or writes) become visible to subsequent read requests. There are two main types of data consistency:

1. Strong Consistency: Any read request immediately reflects the most recent write operation. This means that once you upload or update an object, any subsequent read requests will always return the latest version.

2. Eventual Consistency: After a write operation, there might be a brief delay before the changes are visible. This is common in systems designed for high availability, where data updates are propagated across multiple locations, and reads might temporarily return older versions of the object until all copies are updated.

Historically (before December 2020), S3 offered read-after-write consistency only for PUTs of new objects, while overwrites and deletes were eventually consistent. Since December 2020, S3 provides strong read-after-write consistency for all of these operations:

S3 Provides Strong Consistency for New Objects (PUT): When you upload a new object to S3, it offers strong (immediate) consistency. This means that as soon as the upload is complete, any subsequent read requests will return the latest version of the object.

S3 Provides Strong Consistency for Overwritten Objects: If you overwrite an existing object in S3, any read that follows the successful write returns the updated object. Under the older eventual-consistency model, reads could briefly return the previous version.

S3 Provides Strong Consistency for Deleted Objects: When you delete an object from S3, subsequent read requests no longer return it. Under the older eventual-consistency model, reads might temporarily have returned the deleted object.

C.7 S3 Encryption: Keeping Your Data Secure

AWS S3 offers robust encryption options to ensure your data is secure at rest. You can choose between server-side encryption and client-side encryption based on your security needs.

C.7.1 Server-Side Encryption (SSE)

With server-side encryption, data is encrypted by the S3 service before it is stored on disk. This process is seamless, and encryption with S3-managed keys does not incur additional costs (SSE-KMS usage is subject to AWS KMS charges). There are three main types of server-side encryption:

SSE-S3 (S3 Managed Keys): S3 handles the entire encryption process using S3-managed keys. Your data is encrypted with AES-256 encryption, and the master key is regularly rotated by AWS for added security.

SSE-KMS (AWS KMS Managed Keys): Data is encrypted using keys managed by AWS Key Management Service (KMS). This option provides additional control, allowing you to manage permissions and audit key usage through AWS KMS.

SSE-C (Customer-Provided Keys): With SSE-C, you provide your own encryption key. AWS uses this key to encrypt your data but does not store the key. If you lose your key, you will not be able to access your data, as AWS does not retain it.
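At the API level, the three server-side options are selected through request headers sent with an upload. The sketch below builds those headers for each mode; the helper name `sse_headers` is hypothetical, the header names are the ones defined by the S3 REST API, and the example assumes a 256-bit customer key for SSE-C.

```python
import base64
import hashlib
from typing import Dict, Optional


def sse_headers(
    mode: str,
    kms_key_id: Optional[str] = None,
    customer_key: Optional[bytes] = None,
) -> Dict[str, str]:
    """Build the request headers that select a server-side encryption mode."""
    if mode == "SSE-S3":
        return {"x-amz-server-side-encryption": "AES256"}
    if mode == "SSE-KMS":
        headers = {"x-amz-server-side-encryption": "aws:kms"}
        if kms_key_id:  # optional: otherwise the default KMS key for S3 is used
            headers["x-amz-server-side-encryption-aws-kms-key-id"] = kms_key_id
        return headers
    if mode == "SSE-C":
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) key")
        key_b64 = base64.b64encode(customer_key).decode("ascii")
        # The MD5 digest lets S3 verify the key arrived intact.
        md5_b64 = base64.b64encode(hashlib.md5(customer_key).digest()).decode("ascii")
        return {
            "x-amz-server-side-encryption-customer-algorithm": "AES256",
            "x-amz-server-side-encryption-customer-key": key_b64,
            "x-amz-server-side-encryption-customer-key-MD5": md5_b64,
        }
    raise ValueError(f"unknown encryption mode: {mode}")
```

With SSE-C the same key headers must accompany every later read of the object, which is why losing the key means losing access to the data.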

C.7.2 Client-Side Encryption

In client-side encryption, the encryption process is handled on the client’s side before the data is uploaded to S3. This means the client encrypts the data locally, and the encrypted file is then transferred to S3 for storage. This approach gives the client full control over the encryption keys and process.

By using either server-side or client-side encryption, you can ensure your data is protected from unauthorized access, providing peace of mind for sensitive or confidential information stored in AWS S3.

C.8. S3 Static Website Hosting

AWS S3 allows you to host a static website easily, using the files stored in your S3 Bucket. This feature is perfect for hosting simple websites with static content, such as HTML, CSS, and JavaScript files.

Key Features of S3 Static Website Hosting:

- Automatic Scalability: S3-hosted static websites automatically scale to meet traffic demands. There’s no need to configure a load balancer or worry about traffic spikes.
- No Additional Hosting Costs: There are no extra charges for hosting static websites on S3. You only pay for the S3 storage and data transfer costs.
- Custom Domain Support: You can use your own custom domain name with an S3-hosted website, making it easy to integrate with your existing branding.
- HTTP Only: S3 static websites do not natively support HTTPS. They can only be accessed via HTTP. However, you can use AWS CloudFront to enable HTTPS for your site.

S3 Static Website URL Formats:

S3 provides two main URL formats for accessing your static website. Which one applies depends on the region of the Bucket:

- Format 1 (dash before the region, used by some older regions): http://<bucket-name>.s3-website-<aws-region>.amazonaws.com
  Example: http://mybucket.s3-website-us-west-2.amazonaws.com
- Format 2 (dot before the region): http://<bucket-name>.s3-website.<aws-region>.amazonaws.com
  Example: http://mybucket.s3-website.eu-west-3.amazonaws.com

C.9 Pre-Signed URLs: Granting Temporary Access in S3

AWS S3’s Pre-Signed URLs feature allows you to give temporary access to specific objects in your Bucket, even to users who don’t have AWS credentials. This is useful when you want to securely share files without making your entire Bucket public.

Key Features of Pre-Signed URLs:

1. Temporary Access: Pre-Signed URLs come with an expiration time. You set the expiry date and time when creating the URL, after which it becomes invalid.

2. Flexible Access: You can create Pre-Signed URLs for both downloading and uploading files. This makes it a convenient way to share files or allow temporary uploads without exposing your AWS credentials.

3. Secure and Controlled: Since the URL is only valid for a specified time, it provides secure, limited-time access to the object. You control who gets access and for how long.

Example Use Cases:

- File Sharing: Sharing large files like reports, photos, or videos securely without needing to give AWS account access.

- Temporary Uploads: Allowing users to upload files to your S3 Bucket without exposing write permissions.
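The idea behind a pre-signed URL is that the URL itself carries an expiry time and a signature that only the holder of a secret key could have produced. The sketch below illustrates that idea with a plain HMAC; it is a simplified model, not AWS Signature Version 4, and the secret, host name, and function names are all hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"demo-secret-key"  # hypothetical signing key, known only to the server


def _signature(method: str, path: str, expires: int) -> str:
    # The signature covers the HTTP method, the object path, and the expiry.
    message = f"{method}\n{path}\n{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def presign(method: str, path: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return a URL that grants `method` access to `path` for `ttl_seconds`."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    query = urlencode({"Expires": expires, "Signature": _signature(method, path, expires)})
    return f"https://example-bucket.example.com{path}?{query}"


def verify(method: str, url: str, now: Optional[float] = None) -> bool:
    """Check that the URL is unexpired and its signature matches the request."""
    now = time.time() if now is None else now
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["Expires"][0])
        signature = params["Signature"][0]
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False
    return hmac.compare_digest(signature, _signature(method, parsed.path, expires))
```

Because the method is part of the signed message, a URL issued for downloads cannot be reused for uploads, and changing the path or the expiry invalidates the signature. In practice you would let the AWS SDK generate real pre-signed URLs rather than rolling your own.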

C.10 S3 Cross-Region Replication (CRR)

AWS S3’s Cross-Region Replication (CRR) is a powerful feature that enables automatic, asynchronous copying of objects from one Bucket to another Bucket in a different AWS region. This helps increase data availability, meet compliance requirements, and reduce latency for users accessing your data from different geographical locations.

Common Use Cases:

- Low Latency Access: By replicating data to a region closer to your end users, you can reduce latency and improve access speeds.
- Compliance Requirements: Many regulations require storing data in multiple geographic locations for redundancy and disaster recovery.

Key Features of Cross-Region Replication:

1. Versioning Requirement: To use CRR, you must enable versioning on both the source and destination Buckets. This ensures that all versions of the object are replicated.

2. Single Destination Bucket: Replication can only be configured to send objects to one destination Bucket per replication rule.

3. Cross-Account Replication: If you are replicating objects across different AWS accounts, the owner of the source Bucket must have the necessary permissions to replicate objects to the destination Bucket.

4. Replication of Tags: Any tags associated with the object in the source Bucket are also replicated to the destination Bucket.

5. Encryption Limitations: Objects encrypted with SSE-C (Server-Side Encryption with Customer-Provided Keys) have historically been excluded from replication, and objects encrypted with SSE-KMS (Server-Side Encryption with KMS Keys) are replicated only when you explicitly enable this in the replication configuration.

6. No Replication of Replicated Objects: Objects that were originally created as replicas through another CRR process are not replicated again in the destination Bucket.

C.11 S3 Transfer Acceleration

AWS S3 Transfer Acceleration is a feature designed to speed up the upload process for objects, especially when the user is located far from the S3 Bucket’s region. For example, if your S3 Bucket is located in the USA and you are uploading data from India, the upload time can be significant due to network latency. S3 Transfer Acceleration reduces this time by leveraging AWS’s global CloudFront network.

Key Features of S3 Transfer Acceleration:

1. Improved Upload Speeds: By enabling S3 Transfer Acceleration, your upload requests are routed through AWS CloudFront’s edge locations. This provides a faster and more optimized path to the S3 Bucket.

2. Cannot Be Disabled, Only Suspended: Once you enable S3 Transfer Acceleration, it cannot be fully disabled. However, you can suspend it if needed.

3. Optimized Data Transfer: Data is not stored at the CloudFront edge location. Instead, it’s quickly forwarded to the destination S3 Bucket using an optimized network path, reducing latency.

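4. HIPAA Eligibility: Earlier guidance treated S3 Transfer Acceleration as unsuitable for HIPAA workloads, but AWS has since listed it as a HIPAA-eligible service. Check the current AWS eligibility list and your own compliance obligations before using it for sensitive healthcare data.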

Example Use Case:

If you frequently upload large files from geographically distant locations, S3 Transfer Acceleration can significantly reduce transfer times, making it ideal for scenarios like media uploads, global file sharing, and remote backups.

C.12 S3 Access Control

AWS S3 provides flexible options for controlling access to your Buckets and objects. You can set permissions to determine who can view or modify your data, helping you maintain security and compliance.

Ways to Grant Access in S3:

1. Individual Users: You can grant specific permissions to individual users within your AWS account using IAM (Identity and Access Management) policies. This is useful for controlling access at a granular level based on user roles.

2. AWS Accounts: You can share access to your S3 resources with other AWS accounts. This is ideal for scenarios where you need to collaborate with external partners or teams who have their own AWS accounts.

3. Public Access: You can choose to make your S3 Bucket or specific objects publicly accessible. This setting is typically used for static website hosting or when sharing files that don’t require restricted access. However, enabling public access should be done with caution to prevent unauthorized data exposure.

4. All Authenticated Users: This option allows access to any user with AWS credentials. It’s broader than granting access to individual users but still requires the user to be authenticated with an AWS account. This setting is useful for shared resources within the AWS ecosystem but should be carefully managed.
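Access for other accounts or for the public is commonly expressed as a Bucket policy, which is a JSON document. The sketch below generates two such documents; the function names, the Bucket name, and the account ID used in any call are hypothetical, and real policies should be reviewed carefully before being attached to a Bucket.

```python
import json


def public_read_policy(bucket: str) -> str:
    """Policy allowing anyone to read objects in `bucket` (e.g. a static website)."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


def cross_account_read_policy(bucket: str, account_id: str) -> str:
    """Policy allowing another AWS account to list and read objects in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CrossAccountRead",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": ["s3:GetObject", "s3:ListBucket"],
                # ListBucket applies to the bucket, GetObject to the objects in it.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

Keeping the public policy limited to `s3:GetObject` on the objects is a deliberate choice here: it allows reads without allowing anyone to list or modify the Bucket's contents.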

C.13 S3 URL Styles

AWS S3 provides URLs for accessing Buckets and objects via the SDK, API, or browser. There are two main styles for accessing S3 resources: Virtual-Hosted-Style URLs and Path-Style URLs. Let’s look at both formats.

1. Virtual-Hosted-Style URLs

In the virtual-hosted style, the Bucket name is part of the domain name. This is the preferred method as it aligns with modern web standards.

Format: https://<Bucket-Name>.s3.<Region-Name>.amazonaws.com/<Object-Name>

Example: https://mybucket.s3.us-east-1.amazonaws.com/object.txt

- This format is commonly used and is supported by most AWS services and tools.
- It’s easier to configure for custom domains and works well with S3 features like static website hosting.

2. Path-Style URLs (Legacy)

In the path-style format, the Bucket name appears after the S3 endpoint in the URL. AWS announced plans to deprecate path-style URLs for Buckets created after September 30, 2020, but later postponed that deprecation, so path-style requests may still work. Virtual-hosted-style remains the recommended format.

Format: https://s3-<Region-Name>.amazonaws.com/<Bucket-Name>/<Object-Name>

Example: https://s3-us-east-2.amazonaws.com/mybucket/object.txt

- This format was used historically, but it is no longer recommended.
- AWS suggests transitioning to virtual-hosted-style URLs.

Important Notes:

- Region-Specific URLs: If your Bucket is in the "us-east-1" region, you do not need to include the region name in the URL.
- Path-Style Deprecation: Because path-style URLs are slated for eventual retirement, it’s recommended to use virtual-hosted-style URLs for compatibility and future-proofing.
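The difference between the two styles is simply where the Bucket name goes. A minimal sketch of building both forms is shown below; the function names are hypothetical, and the path-style helper uses the dotted `s3.<region>` endpoint form rather than the older dashed form.

```python
from urllib.parse import quote


def virtual_hosted_url(bucket: str, region: str, key: str) -> str:
    """Bucket name is part of the host name (recommended style)."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


def path_style_url(bucket: str, region: str, key: str) -> str:
    """Bucket name is the first path segment (legacy style)."""
    return f"https://s3.{region}.amazonaws.com/{bucket}/{quote(key)}"


# quote() percent-encodes characters such as spaces but leaves "/" intact,
# so keys that look like folder paths stay readable.
url = virtual_hosted_url("mybucket", "us-east-1", "reports/2020 summary.txt")
```

Because the virtual-hosted style puts the Bucket name in the host name, Bucket names containing periods can cause TLS certificate mismatches over HTTPS, which is one reason many teams avoid periods in Bucket names.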

C.14 S3 Multipart Upload

AWS S3’s Multipart Upload feature is designed to handle the upload of large objects by splitting them into smaller parts that can be uploaded independently and in parallel. This makes it easier to upload files larger than 5 GB, which cannot be uploaded in a single PUT request. Multipart upload is highly recommended for any object larger than 100 MB, although it can be used for objects as small as 5 MB.

Key Features of Multipart Upload:

1. Parallel Uploads: Each part of the file is uploaded independently and in parallel, significantly increasing upload speed and improving throughput.

2. Error Handling: If an upload fails for a specific part, only that part needs to be re-uploaded, rather than restarting the entire process. This feature enhances the reliability of the upload process.

Recommended Use Cases:

- Large File Uploads: For files larger than 100 MB, multipart upload is recommended to ensure efficient and reliable transfer.
- High-Throughput Requirements: By uploading parts in parallel, multipart upload can achieve higher upload speeds, especially for large objects.
- Improved Error Recovery: Multipart upload simplifies error recovery. If a part fails, you only need to retry the failed part, making the process more resilient.
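Before uploading, a client has to decide how to cut the file into parts. The sketch below computes such a plan under the S3 limits of a 5 MiB minimum part size (except for the last part) and at most 10,000 parts per upload; the function name `plan_parts` and the 100 MiB default are illustrative assumptions, and no data is actually uploaded.

```python
import math
from typing import List, Tuple

MIB = 1024 ** 2
MIN_PART_SIZE = 5 * MIB  # every part except the last must be at least 5 MiB
MAX_PARTS = 10_000       # a multipart upload may have at most 10,000 parts


def plan_parts(object_size: int, part_size: int = 100 * MIB) -> List[Tuple[int, int, int]]:
    """Return a list of (part_number, start_offset, length) covering the object."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    part_size = max(part_size, MIN_PART_SIZE)
    # Grow the part size if the object would otherwise need more than 10,000 parts.
    part_size = max(part_size, math.ceil(object_size / MAX_PARTS))

    parts = []
    offset, number = 0, 1
    while offset < object_size:
        length = min(part_size, object_size - offset)  # last part may be smaller
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts
```

Each tuple describes a byte range that can be read and uploaded independently, so a failed part can be retried on its own and several parts can be sent in parallel.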

C.15 S3 Server Access Logging

Amazon S3 Server Access Logging allows you to capture and store detailed records of requests made to your S3 buckets. The bucket from which you enable Server Access Logging is referred to as the Source Bucket, while the bucket where these logs are stored is called the Destination Bucket. Note that the Source and Destination buckets can be the same, but it's generally recommended to use separate buckets.

Detailed Log Information: The access logs include details such as the requester’s identity, bucket name, request timestamp, request action, response status code, and any errors encountered.

Default State: Server Access Logging is disabled by default and must be manually enabled.

Destination Bucket Location: The Destination Bucket must be in the same AWS region, and owned by the same account, as the Source Bucket. If you need copies of the logs in another region for redundancy or disaster recovery, replicate them from the Destination Bucket.

Log Delivery Delay: There may be a delay in receiving access logs in the Destination Bucket due to processing time.

Disabling Logs: Once enabled, you can disable Server Access Logging at any time as needed.

C.16 S3 Lifecycle Policies

Amazon S3 Lifecycle Policies allow you to automate actions on objects based on their age or status, optimizing storage costs and data management. With Lifecycle Policies, you can:

Transition Objects: Automatically move objects to a different storage class (e.g., from Standard to Glacier) to reduce costs.

Archive Objects: Transfer objects to long-term archival storage such as Amazon S3 Glacier or Glacier Deep Archive.

Delete Objects: Automatically delete objects after a specified time period to manage storage space and comply with data retention policies.
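A lifecycle rule is usually written as a small configuration document. The sketch below shows one such rule as a Python dictionary, in the shape accepted by the S3 lifecycle configuration API, together with a helper that works out which storage class an object would be in at a given age. The rule ID, prefix, and day counts are illustrative assumptions, and `storage_class_at` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Any, Dict, Optional

# Hypothetical rule: logs move to Standard-IA after 30 days, to Glacier after
# 90 days, and are deleted after one year.
LIFECYCLE_RULE: Dict[str, Any] = {
    "ID": "archive-then-expire-logs",
    "Filter": {"Prefix": "logs/"},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}


def storage_class_at(age_days: int, rule: Dict[str, Any] = LIFECYCLE_RULE) -> Optional[str]:
    """Return the storage class at `age_days`, or None once the object has expired."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"  # objects are assumed to start in S3 Standard
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current
```

Note that S3 evaluates lifecycle rules asynchronously, so real transitions and deletions may happen some time after the configured day count is reached.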

C.17 S3 Event Notifications

Amazon S3 Event Notifications allow you to automatically trigger actions when specific events occur in your S3 bucket. You can configure notifications to be sent to the following AWS services:

Amazon SNS (Simple Notification Service): Sends notifications to subscribers when an event is triggered.

Amazon SQS (Simple Queue Service): Sends event messages to an SQS queue for processing.

AWS Lambda: Invokes a Lambda function to process the event in real-time.

Bucket-Level Configuration: Event notifications are configured at the bucket level, and you can define multiple event types based on your requirements.

No Additional Charges: There are no extra costs for enabling S3 Event Notifications.

Supported Event Types: You can configure notifications for events like object creation, deletion, or bucket-related changes.
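When the target is AWS Lambda, the function receives a JSON event listing the affected objects. The sketch below shows a minimal handler that extracts the event name, Bucket, and object key from each record; the function name `handler` and the returned structure are illustrative choices, while the `Records`, `eventName`, and `s3` fields follow the S3 event message format.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def handler(event: Dict[str, Any], context: Any = None) -> List[Dict[str, str]]:
    """Collect the event name, bucket, and key from each S3 event record."""
    results = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        results.append(
            {
                "event": record["eventName"],
                "bucket": s3_info["bucket"]["name"],
                # Object keys arrive URL-encoded (spaces become "+"), so decode them.
                "key": unquote_plus(s3_info["object"]["key"]),
            }
        )
    return results
```

A real function would act on each record, for example by resizing an uploaded image or indexing a new document, instead of just returning the parsed values.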

AWS Elastic Compute Cloud -EC2

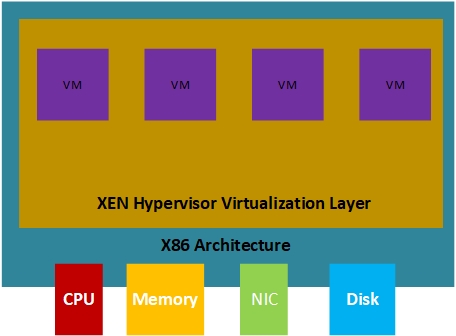

Elastic Compute Cloud (EC2) is a virtual Machine on AWS Host (Physical servers). AWS uses a XEN hypervisor to create virtualization and p...

Amazon Web Services Fundamentals

-

Before jumping into AWS Elastic Load balancer , first we need to understand, what is the Need of Load balancer in IT World. 1...

-

Before jumping into AWS Route 53 , first we need to understand, what is the Domain Name System(DNS) and how its work .

-

Before going to AWS VPC (virtual private Cloud), we need to understand the basic terms of Networking, which is being used in all Cloud ...

-

Before jumping into AWS CloudFront , first we need to understand, what is the Content Delivery Network(CDN), Static Web Content and Dyn...

-

Before going to AWS EBS, we need to understand the basic knowledge of storages, which is being used in Cloud environment and we are using...

-

Before we dive into AWS S3 (Simple Storage Service), it’s important to understand the basics of storage types: Block Storage, File Storage...

-

Elastic Compute Cloud (EC2) is a virtual Machine on AWS Host (Physical servers). AWS uses a XEN hypervisor to create virtualization and p...

-

Before jumping into AWS Auto Scaling, first we need to understand the meaning of scaling in Cloud world. Scaling means to meet the right ...

-

Before jumping into AWS Relational Database Service (RDS) , first we need to understand, what is the Relational Database and type of ...

-

This Blog covers all important points of EBS snapshot, EBS encryption and EBS sharing.